Server Configuration

Server Access port configuration

Server access ports typically fall into three categories:

- Normal servers, which require simple gigabit connectivity with fail-over on a NIC or link fault (what HP calls Network Fault Tolerance, or NFT)

- High-bandwidth servers, which require 2 Gbps of throughput using link aggregation

- VMware servers, which require special configuration

Auto-negotiation of speed and duplex now works well with server gigabit interfaces, so do not try to set the speed or duplex manually. One reason is that auto-negotiation allows the time-domain reflectometry (TDR) cable tester built into some gigabit Ethernet modules to function.

For example:

switch#test cable-diagnostics tdr interface gi1/2/1
TDR test started on interface Gi1/2/1
A TDR test can take a few seconds to run on an interface
Use 'show cable-diagnostics tdr' to read the TDR results.

switch#show cable-diagnostics tdr interface gi1/2/1
TDR test last run on: August 06 13:58:00

Interface Speed Pair Cable length        Distance to fault   Channel Pair status
--------- ----- ---- ------------------- ------------------- ------- ------------
Gi1/2/1   1000  1-2  0 +/- 6 m           N/A                 Pair B  Terminated
                3-6  0 +/- 6 m           N/A                 Pair A  Terminated
                4-5  0 +/- 6 m           N/A                 Pair D  Terminated
                7-8  0 +/- 6 m           N/A                 Pair C  Terminated

If a server links up at 100 Mbps even though both the server and the switch port are set to auto/auto, a cable fault is likely. 1000BASE-T requires all four pairs to be terminated, whereas 100BASE-TX uses only two.
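The pair rule above can be written as a small check. This is a minimal sketch that assumes TDR output laid out like the sample: the pair label (such as `1-2`) appears somewhere on the row and the pair status is the last column. `pair_status`, `gigabit_capable` and `fast_ethernet_capable` are hypothetical helpers. Real output differs between platforms and IOS versions, and the sample rows below describe a hypothetical faulty cable.

```python
GIGABIT_PAIRS = ("1-2", "3-6", "4-5", "7-8")
FAST_ETHERNET_PAIRS = ("1-2", "3-6")  # 100BASE-TX uses only these two pairs


def pair_status(tdr_output: str) -> dict[str, str]:
    """Map each pair label to its status (assumed to be the last column of its row)."""
    status = {}
    for line in tdr_output.splitlines():
        tokens = line.split()
        for token in tokens:
            if token in GIGABIT_PAIRS:
                status[token] = tokens[-1]
                break
    return status


def gigabit_capable(status: dict[str, str]) -> bool:
    # 1000BASE-T needs all four pairs terminated
    return all(status.get(p) == "Terminated" for p in GIGABIT_PAIRS)


def fast_ethernet_capable(status: dict[str, str]) -> bool:
    # 100BASE-TX only needs pairs 1-2 and 3-6
    return all(status.get(p) == "Terminated" for p in FAST_ETHERNET_PAIRS)


# Hypothetical faulty cable: pair 4-5 is open, so the link can only run at 100 Mbps
sample = """\
Gi1/2/1   1000  1-2  0 +/- 6 m   N/A   Pair B  Terminated
                3-6  0 +/- 6 m   N/A   Pair A  Terminated
                4-5  0 +/- 6 m   N/A   Pair D  Open
                7-8  0 +/- 6 m   N/A   Pair C  Terminated
"""
s = pair_status(sample)
print(gigabit_capable(s), fast_ethernet_capable(s))  # False True
```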

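As per established practice, access ports should also be configured with spanning-tree portfast, so that they move straight to forwarding instead of waiting through the listening and learning states.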

Port-security is also worth mentioning, as it is NOT compatible with dual-homed servers using HP’s network teaming software. A cable fault on NIC 1 causes the server’s MAC address to move over to NIC 2’s switch port. The switch sees this as a security violation, blocks the traffic and generates a syslog message.
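The failure mode described above can be illustrated with a toy model of a secure-address table. This is a simplified sketch, not Cisco's implementation. `SecurePortTable`, the MAC address and the port names are hypothetical. The model covers only one rule: an address secured on one port is a violation when it appears on another secure port in the same VLAN.

```python
class SecurePortTable:
    """Toy model: a secure MAC is bound to the port it was first learned on."""

    def __init__(self) -> None:
        self.secure: dict[tuple[int, str], str] = {}  # (vlan, mac) -> port

    def frame_seen(self, vlan: int, mac: str, port: str) -> str:
        key = (vlan, mac)
        owner = self.secure.get(key)
        if owner is None:
            self.secure[key] = port  # first sighting: learn as secure on this port
            return "learned"
        if owner == port:
            return "forward"
        # Same address on a different secure port in the same VLAN
        return "violation"


table = SecurePortTable()
team_mac = "00:1b:78:aa:bb:cc"  # hypothetical teamed-NIC MAC
print(table.frame_seen(10, team_mac, "Gi1/2/1"))  # learned (NIC 1 active)
print(table.frame_seen(10, team_mac, "Gi1/2/1"))  # forward
# NIC 1 cable fails; the team moves the same MAC to NIC 2 on another port
print(table.frame_seen(10, team_mac, "Gi2/2/1"))  # violation
```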

Normal Servers

Switch Configuration

interface <interface name>
 switchport
 !Set an access VLAN
 switchport access vlan <###>
 !Force access mode
 switchport mode access
 !Set an acceptable broadcast storm level
 storm-control broadcast level 0.10
 !Port-security is not compatible with dual-homed servers
 no switchport port-security
 no switchport port-security maximum
 no switchport port-security violation restrict
 spanning-tree portfast
end
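To put `storm-control broadcast level 0.10` in perspective: the level is a percentage of interface bandwidth, so on a gigabit port it allows roughly 1 Mbps of broadcast traffic. The sketch below does that arithmetic. The frames-per-second figure assumes minimum-size 64-byte broadcast frames (such as ARP requests) and ignores preamble and inter-frame gap. How the switch hardware actually counts bytes is an assumption here.

```python
def storm_threshold_bps(level_percent: float, link_bps: float) -> float:
    """Broadcast rate above which storm-control starts suppressing (level is % of bandwidth)."""
    return link_bps * level_percent / 100


def broadcast_frames_per_second(rate_bps: float, frame_bytes: int = 64) -> float:
    # Assumes only frame bytes are counted (no preamble or inter-frame gap)
    return rate_bps / (frame_bytes * 8)


threshold = storm_threshold_bps(0.10, 1_000_000_000)
print(f"{threshold / 1e6:.2f} Mbps")                              # 1.00 Mbps
print(f"{broadcast_frames_per_second(threshold):.0f} frames/s")   # 1953 frames/s
```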

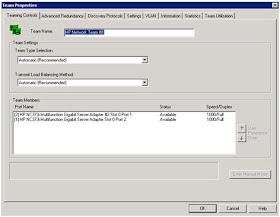

Server configuration

The default configuration for a teaming interface on HP servers is Type: Automatic and Transmit: Automatic. On switch ports that are not configured for EtherChannel, this configuration defaults to Transmit Load Balancing with Fault Tolerance (TLB). One NIC transmits and receives traffic, while the other only transmits.

From a network point of view this makes troubleshooting difficult. Transmit traffic is spread over two NICs with two MAC addresses, while receive traffic is directed to just one NIC, depending on which NIC responds to ARP requests.
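A toy model shows why TLB complicates troubleshooting. Outbound frames leave from different NICs (with different source MACs), so the switch learns the server on two ports. Inbound frames, however, all arrive on the NIC whose MAC was given out in ARP replies. The per-destination selection rule (last octet of the destination IP), the MACs and the port names are all hypothetical stand-ins. HP's actual TLB balancing method is configurable and is not reproduced here.

```python
NICS = {
    "NIC1": ("00:1b:78:00:00:01", "Gi1/2/1"),  # (MAC, switch port), hypothetical
    "NIC2": ("00:1b:78:00:00:02", "Gi2/2/1"),
}
ARP_NIC = "NIC1"  # the NIC whose MAC is handed out in ARP replies


def transmit_nic(dst_ip: str) -> str:
    """Pick the outbound NIC per destination (stand-in for TLB's balancing method)."""
    names = sorted(NICS)
    return names[int(dst_ip.rsplit(".", 1)[1]) % len(names)]


def receive_nic() -> str:
    # Inbound frames are addressed to the MAC given out in ARP replies
    return ARP_NIC


destinations = [f"10.0.0.{host}" for host in range(1, 9)]
# MAC table entries the switch learns from the server's outbound frames
learned = {NICS[transmit_nic(ip)][0]: NICS[transmit_nic(ip)][1] for ip in destinations}
print(learned)  # two MACs, learned on two different ports
print("all inbound traffic arrives on", NICS[receive_nic()][1])  # Gi1/2/1
```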

Our PREFERRED configuration is to use either:

- NFT Teaming Configuration

- NFT Teaming with preference configuration

Two servers that are known to exchange a lot of traffic with each other but do not use EtherChannel should use NFT with preference, with the active NICs on both servers connected to the same switch. This keeps the traffic between them local to that switch instead of crossing the inter-switch link.
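NFT with preference comes down to one rule: use the preferred NIC whenever its link is up, and otherwise fail over. The sketch below models that rule, together with the placement check for two busy servers. The function names, NIC names and switch names are hypothetical. The real selection is made by HP's teaming driver.

```python
from typing import Optional


def active_nic(nics: list[dict]) -> Optional[dict]:
    """Return the most preferred NIC with link up (lower number = more preferred)."""
    up = [nic for nic in nics if nic["link_up"]]
    return min(up, key=lambda nic: nic["preference"]) if up else None


def same_switch(server_a: list[dict], server_b: list[dict]) -> bool:
    """True if both servers' active NICs connect to the same switch."""
    a, b = active_nic(server_a), active_nic(server_b)
    return a is not None and b is not None and a["switch"] == b["switch"]


app = [
    {"name": "NIC1", "switch": "switch-1", "preference": 1, "link_up": True},
    {"name": "NIC2", "switch": "switch-2", "preference": 2, "link_up": True},
]
db = [
    {"name": "NIC1", "switch": "switch-1", "preference": 1, "link_up": True},
    {"name": "NIC2", "switch": "switch-2", "preference": 2, "link_up": True},
]
print(active_nic(app)["name"], same_switch(app, db))  # NIC1 True

app[0]["link_up"] = False  # NIC1 cable fault: fail over to NIC2 on switch-2
print(active_nic(app)["name"], same_switch(app, db))  # NIC2 False
```

After a failover like the one at the end, traffic between the two servers crosses switches until the preferred NIC is active again.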

High Bandwidth Servers

Switch Configuration

Note: most settings MUST match between all ports in the same EtherChannel group (e.g. storm-control, access mode and VLAN).

interface <interface name>
 switchport
 !Set an access VLAN
 switchport access vlan <###>
 !Force access mode
 switchport mode access
 !Set an acceptable broadcast storm level
 storm-control broadcast level 0.10
 !Port-security is not compatible with channelling
 no switchport port-security
 no switchport port-security maximum
 no switchport port-security violation restrict
 !Force LACP & enable as passive mode
 channel-protocol lacp
 channel-group <#> mode passive
 spanning-tree portfast
 !Force flowcontrol off to stop any channelling issues
 !Intel cards default to no flow control; HP on-board default to on
 flowcontrol receive off
 flowcontrol send off
end
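Mismatched member settings can prevent ports from bundling, so it may help to run a quick consistency check before adding ports to a channel. This is a hypothetical sketch. It assumes the per-interface settings have already been collected into dictionaries (for example, by hand from `show running-config interface`), and it does not talk to a switch. The setting names and values are illustrative.

```python
def mismatches(members: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """Return each setting whose value differs between member ports."""
    keys = set().union(*(cfg.keys() for cfg in members.values()))
    result = {}
    for key in sorted(keys):
        # A setting missing on one port counts as a mismatch too
        values = {port: cfg.get(key, "<unset>") for port, cfg in members.items()}
        if len(set(values.values())) > 1:
            result[key] = values
    return result


members = {
    "Gi1/2/1": {
        "access vlan": "100",
        "mode": "access",
        "storm-control broadcast": "0.10",
        "channel-group": "100 mode passive",
    },
    "Gi2/2/1": {
        "access vlan": "100",
        "mode": "access",
        "storm-control broadcast": "0.50",
        "channel-group": "100 mode passive",
    },
}
print(mismatches(members))
# {'storm-control broadcast': {'Gi1/2/1': '0.10', 'Gi2/2/1': '0.50'}}
```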

Sample output showing two links being aggregated:

switch#show int po100 etherchannel
Port-channel100 (Primary aggregator)

Age of the Port-channel = 1d:01h:38m:34s
Logical slot/port = 14/4    Number of ports = 2
HotStandBy port = null
Port state = Port-channel Ag-Inuse
Protocol = LACP
Fast-switchover = disabled

Ports in the Port-channel:

Index   Load   Port      EC state           No of bits
------+------+------+------------------+-----------
  1     FF     Gi1/2/1   Passive            8
  0     FF     Gi2/2/1   Passive            8

Time since last port bundled:    0d:00h:00m:05s    Gi1/2/1
Time since last port Un-bundled: 0d:00h:00m:33s    Gi1/2/1
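Aggregation raises total capacity but not the speed of any single conversation. The switch hashes each frame's addresses to choose a member link, so a given flow always uses one gigabit link. The sketch below illustrates the idea with a hypothetical hash: the XOR of the last MAC octets, folded into eight buckets that are shared between the links. The real hash depends on the platform and the `port-channel load-balance` setting, and all addresses here are made up.

```python
NUM_BUCKETS = 8


def bucket(src_mac: str, dst_mac: str) -> int:
    """XOR the last octet of each MAC and keep the low three bits (0-7)."""
    src_low = int(src_mac.split(":")[-1], 16)
    dst_low = int(dst_mac.split(":")[-1], 16)
    return (src_low ^ dst_low) % NUM_BUCKETS


def member_link(src_mac: str, dst_mac: str, links: list[str]) -> str:
    # Buckets are shared round-robin between member links (4 each for 2 links)
    return links[bucket(src_mac, dst_mac) % len(links)]


links = ["Gi1/2/1", "Gi2/2/1"]
server = "00:1b:78:00:00:10"
for client_octet in ("01", "02", "03", "04"):
    client = f"00:50:56:00:00:{client_octet}"
    # Each server/client pair always maps to the same link
    print(client, "->", member_link(server, client, links))
```

Different clients spread across both links, but any one server-to-client conversation is capped at the speed of a single member link.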

Server configuration

The default configuration for a teaming interface on HP servers is Type: Automatic and Transmit: Automatic. This configuration attempts to negotiate an EtherChannel using LACP and, if that fails, falls back to Transmit Load Balancing (TLB). As long as the port-channel and its member physical interfaces are configured correctly, the default configuration seems to work well. TLB is not our preferred fallback mode, but there does not appear to be a way to enable channelling with NFT as the fallback.
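The negotiation outcome can be summarised in a few lines. This sketch makes three assumptions. First, the HP team in its Automatic mode behaves as an LACP active partner (the text above says only that it attempts LACP). Second, it applies the standard LACP rule that a channel forms unless both ends are passive. Third, the team falls back to TLB whenever no channel forms. `lacp_forms` and `team_result` are hypothetical names.

```python
from typing import Optional

# LACP modes: "active" initiates by sending LACPDUs; "passive" only answers them.
# None means the port is not running LACP at all.


def lacp_forms(switch_mode: Optional[str], server_mode: Optional[str]) -> bool:
    """A channel forms only if both ends run LACP and at least one is active."""
    if switch_mode is None or server_mode is None:
        return False
    return "active" in (switch_mode, server_mode)


def team_result(switch_mode: Optional[str], server_mode: Optional[str] = "active") -> str:
    # Assumed HP Automatic behaviour: 802.3ad if LACP forms, otherwise TLB
    return "802.3ad (LACP)" if lacp_forms(switch_mode, server_mode) else "TLB"


print(team_result("passive"))             # 802.3ad (LACP): switch passive, server active
print(team_result(None))                  # TLB: switch port not in a channel-group
print(team_result("passive", "passive"))  # TLB: neither end initiates
```

Because the switch ports are set to passive, the server side is the one that must initiate LACP.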

- Default Teaming Configuration

- Successful LACP negotiation

- Unsuccessful LACP negotiation

Other settings on the server, such as speed/duplex and flow control, are best left at their defaults.

Jumbo Frames

Jumbo frames may improve the performance of some applications. However, at the time of writing no testing has been done to verify whether they introduce problems, either locally or for remote users across a 1500-byte MTU WAN connection, or whether they really improve performance as much as some believe. The material at http://www.nanog.org/mtg-0802/scholl.html may be useful reading.
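Some rough arithmetic shows both sides of the argument. Each Ethernet frame costs 38 bytes of overhead on the wire: a 14-byte header, 4-byte FCS, 8-byte preamble/SFD and 12-byte inter-frame gap. Larger frames therefore carry a slightly higher share of payload and need far fewer frames per second. On the other hand, an IPv4 packet larger than a 1500-byte WAN MTU must be fragmented, or dropped if Don't Fragment is set. The sketch assumes a 9000-byte jumbo MTU (a common but not universal choice) and 20-byte IPv4 headers without options.

```python
import math

WIRE_OVERHEAD = 14 + 4 + 8 + 12  # header + FCS + preamble/SFD + inter-frame gap
IPV4_HEADER = 20                 # assumes no IP options


def payload_efficiency(mtu: int) -> float:
    """Fraction of wire time spent carrying the IP packet."""
    return mtu / (mtu + WIRE_OVERHEAD)


def frames_per_second(mtu: int, link_bps: float = 1e9) -> float:
    # Full-size frames back to back at line rate
    return link_bps / ((mtu + WIRE_OVERHEAD) * 8)


def ipv4_fragments(packet_bytes: int, path_mtu: int = 1500) -> int:
    """Fragments needed for one IPv4 packet; fragment payloads are multiples of 8 bytes."""
    per_fragment = (path_mtu - IPV4_HEADER) // 8 * 8
    return math.ceil((packet_bytes - IPV4_HEADER) / per_fragment)


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} efficient, "
          f"{frames_per_second(mtu):,.0f} frames/s at 1 Gbps, "
          f"{ipv4_fragments(mtu)} fragment(s) over a 1500-byte WAN")
# MTU 1500: 97.5% efficient, 81,274 frames/s at 1 Gbps, 1 fragment(s) over a 1500-byte WAN
# MTU 9000: 99.6% efficient, 13,830 frames/s at 1 Gbps, 7 fragment(s) over a 1500-byte WAN
```

The bandwidth gain is small (about two percentage points). The larger change is roughly six times fewer frames to process per second, at the cost of seven fragments per full-size packet if it has to cross a 1500-byte WAN.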

Jumbo frames are also incompatible with HP’s TCP Offload Engine (TOE) NICs, so enabling them on those cards may reduce throughput. More testing and investigation are required before we can reach any firm conclusions or recommendations. For now, therefore, our recommendation for host access ports is the standard 1500-byte MTU (1518-byte frame size).

However, because every trunk link on a LAN must be able to carry the largest frames sent by any host on that LAN, it is worth building the LAN’s trunk links to support a high MTU even while the access ports still run at 1514 bytes (a 1500-byte MTU). This leaves the option open for later adoption at the host layer and makes it easy to add devices that require a high MTU, such as Fibre Channel over IP (FCIP).
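The 1514 and 1518 figures above describe the same 1500-byte MTU, counted without and with the 4-byte FCS. Each 802.1Q tag on a trunk adds another 4 bytes, which is why trunks need headroom above the access-port frame size. Below is a minimal sketch of the arithmetic, using a 9000-byte MTU as an illustrative jumbo value.

```python
ETH_HEADER = 14  # destination MAC, source MAC, EtherType
FCS = 4
DOT1Q_TAG = 4


def frame_size(mtu: int, include_fcs: bool = True, vlan_tags: int = 0) -> int:
    """Ethernet frame size in bytes for a given IP MTU."""
    return mtu + ETH_HEADER + (FCS if include_fcs else 0) + DOT1Q_TAG * vlan_tags


print(frame_size(1500, include_fcs=False))  # 1514
print(frame_size(1500))                     # 1518
print(frame_size(1500, vlan_tags=1))        # 1522: standard frame on an 802.1Q trunk
print(frame_size(9000, vlan_tags=1))        # 9022
```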
